By Steve Endow

Last week a client asked me to add a new field to their AP Invoice Import. They wanted to import a unique transaction ID for their AP invoices, which were being generated by their operational system.

They were already using the Document Number field and the PO Number field. So that left them with the AP Invoice Note field. Since the unique transaction ID didn't need to be queried or searched within GP, the Note field, while not ideal, would work for them.

But there was one problem.

When I tried to modify my application to import the new value into the AP Invoice Note field, there was no NOTETEXT eConnect node. That's odd.

I pulled up the handy eConnect Programmer's Guide, jumped to the taPMTransactionInsert documentation, and searched for "note".

No matches found. What? Something must be wrong. Clearly the documentation is incorrect.

Then I checked another eConnect help file. No note. Then I checked the taPMTransactionInsert stored procedure. No NOTETEXT parameter.

So, it would seem that the summer intern who developed the taPMTransactionInsert eConnect method forgot to include the transaction note. I can't imagine any valid reason why you can't import a Note for an AP Invoice. And it's unbelievable that eConnect has been around this long and the Note field still hasn't been added.

Although admittedly, it would seem that this is the first time I've ever needed to import an AP Invoice Note, as I don't recall noticing this issue before.

So... What does one do when eConnect doesn't have what you need? I call it "off roading".

But what will we need to do in order to import a note for a transaction that we are importing via eConnect? Well, it's a bit of a hassle. Not feeling eager to write the code to insert a note, I installed WinGrep on one of my development servers and started searching through my Visual Studio source code files to see if I could find anything.

And behold, I found it. Back in 2012, I apparently wrote an integration that had to insert notes for cash receipt transactions. And guess what. eConnect does not support Notes for cash receipts! The same summer intern must have coded taRMCashReceiptInsert as well.

So, here is the code that I wrote to insert notes for AP Invoices imported with eConnect.

And yes, I know that you could also use the eConnect Post stored procedure, but I don't like them for three good reasons.

1. Debugging SQL stored procedures is a pain compared to debugging C# in Visual Studio

2. Putting custom logic in a Post stored procedure means having code in an additional location

3. When you apply a GP update or new release, the eConnect Pre and Post procs are typically dropped, and nobody ever remembers to re-script the procs. Been there!

Yes, I am using a stored procedure to insert and update the note, but that proc is very granular and will not be dropped by a GP service pack or new release. You could always move the note insert proc into C#, but I don't see much benefit of doing so.

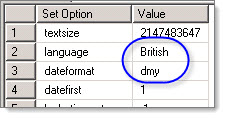

First, there is the stored procedure to perform the insert or update of the note. It even checks which version of GP is being used and appends the appropriate line break.

IF EXISTS(SELECT * FROM sys.objects WHERE type = 'P' AND name = 'csspInsertUpdateNote')

DROP PROCEDURE csspInsertUpdateNote

GO

CREATE PROCEDURE csspInsertUpdateNote

@NOTEINDX AS int,

@NOTETEXT varchar(8000)

AS

BEGIN

--SET NOCOUNT ON;

DECLARE @TXTFIELD varbinary(16);

DECLARE @LENGTH int;

DECLARE @CRLF varchar(10);

DECLARE @APPEND varchar(8000);

DECLARE @GPVERSION int;

--GP 2010+ uses CRLF for notes, whereas GP 9 and 10 use CR only

SELECT TOP 1 @GPVERSION = versionMajor FROM DYNAMICS..DU000020 WHERE COMPANYID = -32767 AND PRODID = 0

IF @GPVERSION >= 11

SET @CRLF = CHAR(13)+CHAR(10);

ELSE

SET @CRLF = CHAR(13);

SET @APPEND = @CRLF + @NOTETEXT;

--Check if a note record exists

IF (SELECT COUNT(*) FROM SY03900 WHERE NOTEINDX = @NOTEINDX) = 0

BEGIN

--If not, insert a new note record with the update

INSERT INTO SY03900 (NOTEINDX, DATE1, TIME1, TXTFIELD) VALUES (@NOTEINDX, DATEADD(dd, 0, DATEDIFF(dd, 0, GETDATE())), CONVERT(VARCHAR(8),GETDATE(),108), @NOTETEXT)

END

ELSE

BEGIN

--If so, update the existing note

--Get the text pointer for the service call note

SELECT @TXTFIELD = TEXTPTR(TXTFIELD), @LENGTH = DATALENGTH(TXTFIELD) FROM SY03900 WHERE NOTEINDX = @NOTEINDX;

UPDATETEXT SY03900.TXTFIELD @TXTFIELD @LENGTH 0 @APPEND;

END

END

GO

GRANT EXEC ON csspInsertUpdateNote TO DYNGRP

GO

Next, there is the C# data access method to call the stored proc.

public static bool InsertUpdateNote(string gpDatabase, decimal noteIndex, string noteUpdate)

{

string commandText = "csspInsertUpdateNote";

SqlParameter[] sqlParameters = new SqlParameter[2];

sqlParameters[0] = new SqlParameter("@NOTEINDX", System.Data.SqlDbType.Decimal);

sqlParameters[0].Value = noteIndex;

sqlParameters[1] = new SqlParameter("@NOTETEXT", System.Data.SqlDbType.VarChar, 8000);

sqlParameters[1].Value = noteUpdate.Trim();

int records = 0;

try

{

records = ExecuteNonQuery(gpDatabase, CommandType.StoredProcedure, commandText, ref sqlParameters);

if (records == 1)

{

return true;

}

else

{

return false;

}

}

catch (Exception ex)

{

Log.Write("An unexpected error occurred in InsertUpdateNote: " + ex.Message, true);

return false;

}

}

Here is a helper data access method to get the note index for a voucher. (The ExecuteScalar method is my own data access wrapper to perform an execute scalar operation.)

public static int GetAPInvoiceNoteIndex(string gpDatabase, string vendorID, string voucherNum)

{

string commandText = "SELECT TOP 1 NOTEINDX FROM PM10000 WHERE VENDORID = @VENDORID AND VCHNUMWK = @VCHNUMWK AND DOCTYPE = 1 ORDER BY DEX_ROW_ID DESC";

SqlParameter[] sqlParameters = new SqlParameter[2];

sqlParameters[0] = new SqlParameter("@VENDORID", System.Data.SqlDbType.VarChar, 15);

sqlParameters[0].Value = vendorID.Trim();

sqlParameters[1] = new SqlParameter("@VCHNUMWK", System.Data.SqlDbType.VarChar, 17);

sqlParameters[1].Value = voucherNum.Trim();

string result = string.Empty;

try

{

result = ExecuteScalar(gpDatabase, CommandType.Text, commandText, sqlParameters);

return Convert.ToInt32(Convert.ToDecimal(result));

}

catch (Exception ex)

{

Log.Write("An unexpected error occurred in GetAPInvoiceNoteIndex: " + ex.Message, true);

return 0;

}

}

eConnect appears to automatically assign a Note Index to AP invoices, so you don't have to get the next index or assign it, but in case it is of interest, here is my data access method to get the next note index. (The ExecuteNonQuery method is my own data access wrapper)

public static decimal GetNextNoteIndex(string gpDatabase)

{

string commandText = "SELECT CMPANYID FROM DYNAMICS.dbo.SY01500 WHERE INTERID = @INTERID";

SqlParameter[] sqlParameters = new SqlParameter[1];

sqlParameters[0] = new SqlParameter("@INTERID", System.Data.SqlDbType.VarChar, 5);

sqlParameters[0].Value = gpDatabase;

string result = ExecuteScalar(gpDatabase, CommandType.Text, commandText, sqlParameters);

int companyID = int.Parse(result);

//Call the smGetNextNoteIndex proc in the Dynamics DB

commandText = "DYNAMICS.dbo.smGetNextNoteIndex";

sqlParameters = new SqlParameter[4];

sqlParameters[0] = new SqlParameter("@I_sCompanyID", System.Data.SqlDbType.SmallInt);

sqlParameters[0].Value = companyID;

sqlParameters[1] = new SqlParameter("@I_iSQLSessionID", System.Data.SqlDbType.Int);

sqlParameters[1].Value = 1;

sqlParameters[2] = new SqlParameter("@O_mNoteIndex", System.Data.SqlDbType.Decimal);

sqlParameters[2].Direction = ParameterDirection.Output;

sqlParameters[2].Value = 0;

sqlParameters[3] = new SqlParameter("@O_iErrorState", System.Data.SqlDbType.Int);

sqlParameters[3].Direction = ParameterDirection.Output;

sqlParameters[3].Value = 0;

decimal noteIndex = 0;

int recordCount = ExecuteNonQuery(gpDatabase, CommandType.StoredProcedure, commandText, ref sqlParameters);

noteIndex = decimal.Parse(sqlParameters[2].Value.ToString());

return noteIndex;

}

I then have a data access method to get the note index of the AP invoice and update the note.

public static bool AppendAPInvoiceNote(string gpDatabase, string vendorID, string voucherNum, string noteText)

{

bool newNote = false;

decimal noteIndex = GetAPInvoiceNoteIndex(gpDatabase, vendorID, voucherNum);

if (noteIndex == 0)

{

newNote = true;

noteIndex = GetNextNoteIndex(gpDatabase);

}

bool success = InsertUpdateNote(gpDatabase, noteIndex, noteText);

if (success == false)

return false;

if (newNote)

success = AssignAPInvoiceNote(gpDatabase, vendorID, voucherNum, noteIndex);

return success;

}

And finally, I call the AppendAPInvoiceNote method after successfully importing each invoice.

success = DataAccess.AppendAPInvoiceNote(gpDatabase, vendorID, voucherNum, noteText);

if (success == false)

{

Log.Write("Failed to save Note for Vendor " + vendorID + " transaction " + voucherNum, true);

}

Pretty straightforward, but I am so glad I didn't have to write that from scratch...again, particularly because this was an urgent request and I just didn't have the time available. That would have been a fair amount of research and coding just to insert a Note. That eConnect should import for me.

Last week a client asked me to add a new field to their AP Invoice Import. They wanted to import a unique transaction ID for their AP invoices, which were being generated by their operational system.

They were already using the Document Number field and the PO Number field. So that left them with the AP Invoice Note field. Since the unique transaction ID didn't need to be queried or searched within GP, the Note field, while not ideal, would work for them.

But there was one problem.

When I tried to modify my application to import the new value into the AP Invoice Note field, there was no NOTETEXT eConnect node. That's odd.

I pulled up the handy eConnect Programmer's Guide, jumped to the taPMTransactionInsert documentation, and searched for "note".

No matches found. What? Something must be wrong. Clearly the documentation is incorrect.

Then I checked another eConnect help file. No note. Then I checked the taPMTransactionInsert stored procedure. No NOTETEXT parameter.

So, it would seem that the summer intern who developed the taPMTransactionInsert eConnect method forgot to include the transaction note. I can't imagine any valid reason why you can't import a Note for an AP Invoice. And it's unbelievable that eConnect has been around this long and the Note field still hasn't been added.

Although admittedly, it would seem that this is the first time I've ever needed to import an AP Invoice Note, as I don't recall noticing this issue before.

So... What does one do when eConnect doesn't have what you need? I call it "off roading".

But what will we need to do in order to import a note for a transaction that we are importing via eConnect? Well, it's a bit of a hassle. Not feeling eager to write the code to insert a note, I installed WinGrep on one of my development servers and started searching through my Visual Studio source code files to see if I could find anything.

And behold, I found it. Back in 2012, I apparently wrote an integration that had to insert notes for cash receipt transactions. And guess what. eConnect does not support Notes for cash receipts! The same summer intern must have coded taRMCashReceiptInsert as well.

So, here is the code that I wrote to insert notes for AP Invoices imported with eConnect.

And yes, I know that you could also use the eConnect Post stored procedure, but I don't like them for three good reasons.

1. Debugging SQL stored procedures is a pain compared to debugging C# in Visual Studio

2. Putting custom logic in a Post stored procedure means having code in an additional location

3. When you apply a GP update or new release, the eConnect Pre and Post procs are typically dropped, and nobody ever remembers to re-script the procs. Been there!

Yes, I am using a stored procedure to insert and update the note, but that proc is very granular and will not be dropped by a GP service pack or new release. You could always move the note insert proc into C#, but I don't see much benefit of doing so.

First, there is the stored procedure to perform the insert or update of the note. It even checks which version of GP is being used and appends the appropriate line break.

IF EXISTS(SELECT * FROM sys.objects WHERE type = 'P' AND name = 'csspInsertUpdateNote')

DROP PROCEDURE csspInsertUpdateNote

GO

CREATE PROCEDURE csspInsertUpdateNote

@NOTEINDX AS int,

@NOTETEXT varchar(8000)

AS

BEGIN

--SET NOCOUNT ON;

DECLARE @TXTFIELD varbinary(16);

DECLARE @LENGTH int;

DECLARE @CRLF varchar(10);

DECLARE @APPEND varchar(8000);

DECLARE @GPVERSION int;

--GP 2010+ uses CRLF for notes, whereas GP 9 and 10 use CR only

SELECT TOP 1 @GPVERSION = versionMajor FROM DYNAMICS..DU000020 WHERE COMPANYID = -32767 AND PRODID = 0

IF @GPVERSION >= 11

SET @CRLF = CHAR(13)+CHAR(10);

ELSE

SET @CRLF = CHAR(13);

SET @APPEND = @CRLF + @NOTETEXT;

--Check if a note record exists

IF (SELECT COUNT(*) FROM SY03900 WHERE NOTEINDX = @NOTEINDX) = 0

BEGIN

--If not, insert a new note record with the update

INSERT INTO SY03900 (NOTEINDX, DATE1, TIME1, TXTFIELD) VALUES (@NOTEINDX, DATEADD(dd, 0, DATEDIFF(dd, 0, GETDATE())), CONVERT(VARCHAR(8),GETDATE(),108), @NOTETEXT)

END

ELSE

BEGIN

--If so, update the existing note

--Get the text pointer for the service call note

SELECT @TXTFIELD = TEXTPTR(TXTFIELD), @LENGTH = DATALENGTH(TXTFIELD) FROM SY03900 WHERE NOTEINDX = @NOTEINDX;

UPDATETEXT SY03900.TXTFIELD @TXTFIELD @LENGTH 0 @APPEND;

END

END

GO

GRANT EXEC ON csspInsertUpdateNote TO DYNGRP

GO

Next, there is the C# data access method to call the stored proc.

public static bool InsertUpdateNote(string gpDatabase, decimal noteIndex, string noteUpdate)

{

string commandText = "csspInsertUpdateNote";

SqlParameter[] sqlParameters = new SqlParameter[2];

sqlParameters[0] = new SqlParameter("@NOTEINDX", System.Data.SqlDbType.Decimal);

sqlParameters[0].Value = noteIndex;

sqlParameters[1] = new SqlParameter("@NOTETEXT", System.Data.SqlDbType.VarChar, 8000);

sqlParameters[1].Value = noteUpdate.Trim();

int records = 0;

try

{

records = ExecuteNonQuery(gpDatabase, CommandType.StoredProcedure, commandText, ref sqlParameters);

if (records == 1)

{

return true;

}

else

{

return false;

}

}

catch (Exception ex)

{

Log.Write("An unexpected error occurred in InsertUpdateNote: " + ex.Message, true);

return false;

}

}

Here is a helper data access method to get the note index for a voucher. (The ExecuteScalar method is my own data access wrapper to perform an execute scalar operation.)

public static int GetAPInvoiceNoteIndex(string gpDatabase, string vendorID, string voucherNum)

{

string commandText = "SELECT TOP 1 NOTEINDX FROM PM10000 WHERE VENDORID = @VENDORID AND VCHNUMWK = @VCHNUMWK AND DOCTYPE = 1 ORDER BY DEX_ROW_ID DESC";

SqlParameter[] sqlParameters = new SqlParameter[2];

sqlParameters[0] = new SqlParameter("@VENDORID", System.Data.SqlDbType.VarChar, 15);

sqlParameters[0].Value = vendorID.Trim();

sqlParameters[1] = new SqlParameter("@VCHNUMWK", System.Data.SqlDbType.VarChar, 17);

sqlParameters[1].Value = voucherNum.Trim();

string result = string.Empty;

try

{

result = ExecuteScalar(gpDatabase, CommandType.Text, commandText, sqlParameters);

return Convert.ToInt32(Convert.ToDecimal(result));

}

catch (Exception ex)

{

Log.Write("An unexpected error occurred in GetAPInvoiceNoteIndex: " + ex.Message, true);

return 0;

}

}

eConnect appears to automatically assign a Note Index to AP invoices, so you don't have to get the next index or assign it, but in case it is of interest, here is my data access method to get the next note index. (The ExecuteNonQuery method is my own data access wrapper)

public static decimal GetNextNoteIndex(string gpDatabase)

{

string commandText = "SELECT CMPANYID FROM DYNAMICS.dbo.SY01500 WHERE INTERID = @INTERID";

SqlParameter[] sqlParameters = new SqlParameter[1];

sqlParameters[0] = new SqlParameter("@INTERID", System.Data.SqlDbType.VarChar, 5);

sqlParameters[0].Value = gpDatabase;

string result = ExecuteScalar(gpDatabase, CommandType.Text, commandText, sqlParameters);

int companyID = int.Parse(result);

//Call the smGetNextNoteIndex proc in the Dynamics DB

commandText = "DYNAMICS.dbo.smGetNextNoteIndex";

sqlParameters = new SqlParameter[4];

sqlParameters[0] = new SqlParameter("@I_sCompanyID", System.Data.SqlDbType.SmallInt);

sqlParameters[0].Value = companyID;

sqlParameters[1] = new SqlParameter("@I_iSQLSessionID", System.Data.SqlDbType.Int);

sqlParameters[1].Value = 1;

sqlParameters[2] = new SqlParameter("@O_mNoteIndex", System.Data.SqlDbType.Decimal);

sqlParameters[2].Direction = ParameterDirection.Output;

sqlParameters[2].Value = 0;

sqlParameters[3] = new SqlParameter("@O_iErrorState", System.Data.SqlDbType.Int);

sqlParameters[3].Direction = ParameterDirection.Output;

sqlParameters[3].Value = 0;

decimal noteIndex = 0;

int recordCount = ExecuteNonQuery(gpDatabase, CommandType.StoredProcedure, commandText, ref sqlParameters);

noteIndex = decimal.Parse(sqlParameters[2].Value.ToString());

return noteIndex;

}

I then have a data access method to get the note index of the AP invoice and update the note.

public static bool AppendAPInvoiceNote(string gpDatabase, string vendorID, string voucherNum, string noteText)

{

bool newNote = false;

decimal noteIndex = GetAPInvoiceNoteIndex(gpDatabase, vendorID, voucherNum);

if (noteIndex == 0)

{

newNote = true;

noteIndex = GetNextNoteIndex(gpDatabase);

}

bool success = InsertUpdateNote(gpDatabase, noteIndex, noteText);

if (success == false)

return false;

if (newNote)

success = AssignAPInvoiceNote(gpDatabase, vendorID, voucherNum, noteIndex);

return success;

}

And finally, I call the AppendAPInvoiceNote method after successfully importing each invoice.

success = DataAccess.AppendAPInvoiceNote(gpDatabase, vendorID, voucherNum, noteText);

if (success == false)

{

Log.Write("Failed to save Note for Vendor " + vendorID + " transaction " + voucherNum, true);

}

Pretty straightforward, but I am so glad I didn't have to write that from scratch...again, particularly because this was an urgent request and I just didn't have the time available. That would have been a fair amount of research and coding just to insert a Note. That eConnect should import for me.

Steve Endow is a Microsoft MVP for Dynamics GP and a Dynamics GP Certified IT Professional in Los Angeles. He is the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.